San Francisco, California Oct 29, 2025 (Issuewire.com) - Everest AI, a Silicon Valley-based AI infrastructure startup, today announced the launch of its C1 single board computer, a managed on-premises computing solution designed to provide enterprises with an alternative to expensive cloud dependencies. The announcement was accompanied by an unconventional marketing campaign featuring a music video with lyrics directly challenging major cloud computing providers over pricing practices.

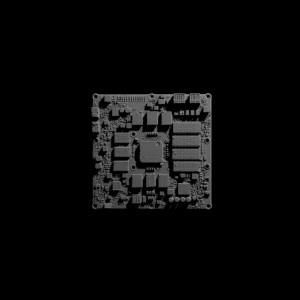

The C1 represents Everest's entry into the managed on-premises computing market, targeting organizations seeking the control and cost predictability of owned infrastructure combined with the ease of deployment typically associated with cloud services. Built on a Qualcomm Snapdragon X2 Elite Extreme ARM64-based architecture, the C1 is optimized for AI inference workloads where local processing can dramatically reduce both latency and ongoing operational costs.

"Cloud providers have positioned themselves as lock-in landlords rather than service providers, and enterprises are tired of unpredictable billing, egress fees, and terms-of-service changes they can't control," said Everest AI CEO Gabriel Saint-Martin. "The C1 gives organizations ownership economics with cloud-like management capabilities, hybrid backup, and cloud failover, the best of both worlds."

The C1 packs significant computing power into a compact 125 mm × 100 mm form factor:

• Processor: Qualcomm Snapdragon X2 Elite Extreme (X2E-96-100), ARM64 architecture, 18 cores (12 Prime cores @ 4.4 GHz base, 6 Performance cores @ 3.6 GHz), boosting up to 5.0 GHz on one or two cores

• Memory: 128 GB LPDDR5X-9523 on a 192-bit bus, 228 GB/s bandwidth

• AI Performance: Dedicated NPU delivering 80 TOPS (INT8) for AI inference

• GPU: Adreno X2-90 @ 1.85 GHz

• Storage: Dual M.2 NVMe slots

• Cache: 53MB total cache

• Connectivity: Wi-Fi 7, Bluetooth 5.4

• Power: Redundant dual USB4 100 W power inputs

• Network: Dual Ethernet (board + IPMI management)

• HyperLink1: 100GB+ node interconnect
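The quoted memory bandwidth follows directly from the memory specification. LPDDR5X-9523 performs 9,523 million transfers per second, and a 192-bit bus moves 24 bytes per transfer, so the theoretical peak works out as follows (a back-of-the-envelope check, not a measured figure):

$$
\text{Peak bandwidth} = 9523 \times 10^{6}\ \tfrac{\text{transfers}}{\text{s}} \times \frac{192\ \text{bits}}{8\ \text{bits/byte}} = 9523 \times 10^{6} \times 24\ \tfrac{\text{B}}{\text{s}} \approx 228.6\ \text{GB/s}
$$

This matches the 228 GB/s listed above; sustained bandwidth under real inference workloads will typically be lower than this theoretical peak.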

The C1's neural processing unit supports major frameworks including TensorFlow, PyTorch, and ONNX Runtime, with optimized drivers for popular models such as LLaMA, Stable Diffusion, and Whisper. Everest claims the C1 can handle real-time inference for medium-sized language models entirely on-device, eliminating API costs for high-volume applications.

The C1 is aggressively priced to challenge cloud economics:

• Single Unit: $1,199

• Volume Pricing (8+ units): $899 per unit

• Enterprise Pricing: Available for larger deployments
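To make the tiers concrete, the sketch below compares single-unit and volume pricing. It assumes, since the announcement does not say, that the $899 rate applies to every unit once an order reaches 8 units; `order_cost` and the constant names are hypothetical, and enterprise pricing is not modeled.

```python
# Hypothetical pricing helper. Assumption (not stated in the release):
# the $899 volume rate applies to every unit once an order reaches 8 units.
SINGLE_UNIT_PRICE = 1_199  # USD per unit, from the announcement
VOLUME_UNIT_PRICE = 899    # USD per unit for orders of 8+ units
VOLUME_THRESHOLD = 8       # minimum units for volume pricing


def order_cost(units: int) -> int:
    """Return the total hardware cost in USD for an order of `units` C1 boards."""
    if units < 1:
        raise ValueError("units must be positive")
    unit_price = VOLUME_UNIT_PRICE if units >= VOLUME_THRESHOLD else SINGLE_UNIT_PRICE
    return units * unit_price


if __name__ == "__main__":
    for n in (1, 7, 8):
        list_cost = n * SINGLE_UNIT_PRICE
        actual = order_cost(n)
        # Print the order total and the savings relative to single-unit pricing.
        print(f"{n} units: ${actual:,} (saves ${list_cost - actual:,})")
```

Under this assumption, the volume tier is a flat $300 (about 25%) discount per unit, and an 8-unit order ($7,192) costs less in total than a 7-unit order ($8,393).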

Pre-orders are open now, with first shipments scheduled for Q2 2026. The company plans to ship units in bulk batches, with priority given to the largest enterprise orders.

Each unit includes:

• 3-year hardware warranty

• Lifetime access to Everest's IPMI management software platform

• Remote monitoring and firmware updates

• Fleet orchestration tools

Founded in September 2025, Everest AI is on a mission to democratize on-premises AI computing. The company emerged from stealth with backing from prominent technology investors who share the vision that enterprise AI infrastructure needs alternatives to expensive cloud dependencies. With a team comprising veterans from major cloud providers, semiconductor companies, and AI research labs, Everest brings insider expertise to solving the very problems its founders experienced firsthand.

Unlike traditional hardware vendors, Everest provides continuous software updates, monitoring tools, and remote management capabilities typically associated with cloud services, creating a hybrid model that bridges owned infrastructure and managed services. The company's philosophy centers on ownership economics and transparent pricing.

The C1 launch includes a music video featuring lyrics targeting AWS, Azure, and Google Cloud Platform over egress fees, unexpected terms-of-service changes, and unpredictable billing. The video articulates frustrations that have been simmering in the developer community, with lines like "Cloud's a landlord eating my lunch" and "Your ToS changed while I was sleeping, monthly bills got me weeping."

The provocative campaign represents a calculated bet that authenticity and directness will resonate with technical decision-makers frustrated with cloud cost structures. The music video format is designed to generate organic social media attention and reach audiences that traditional enterprise marketing cannot.

Everest's future roadmap includes the BladeRack, a 1U server chassis with modular cooling and datacenter-optimized management features that fits up to 18 C1 boards. It is scheduled for release in late 2026.

For more information, visit www.everest.us.com or contact press@everest.us.com.

Media Contact

Everest AI

Email: press@everest.us.com

Phone: (689) 247-2039

Address: 2100 41st Ave

Website: http://everest.us.com